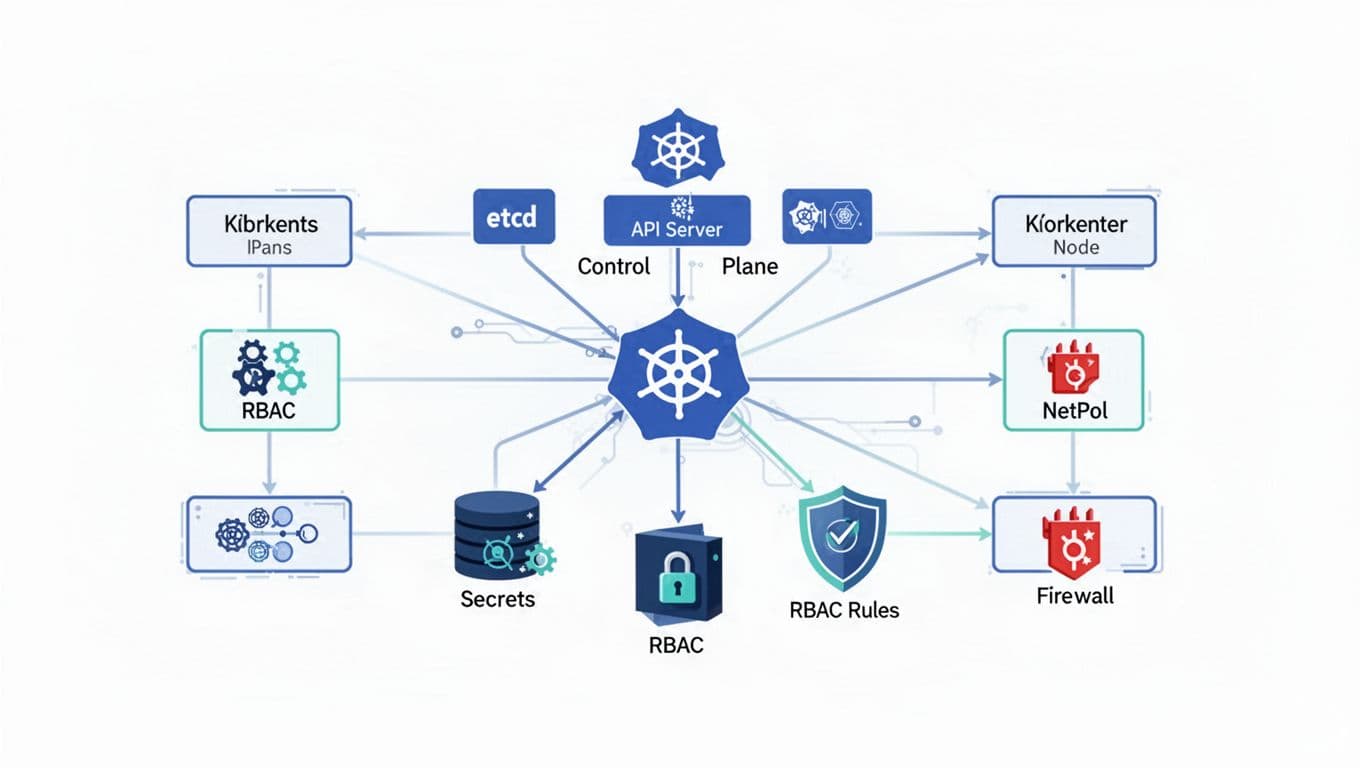

Production Kubernetes security rarely fails because of exotic exploits. It fails because one small permission is too broad, one Secret is exposed, or east-west traffic stays wide open.

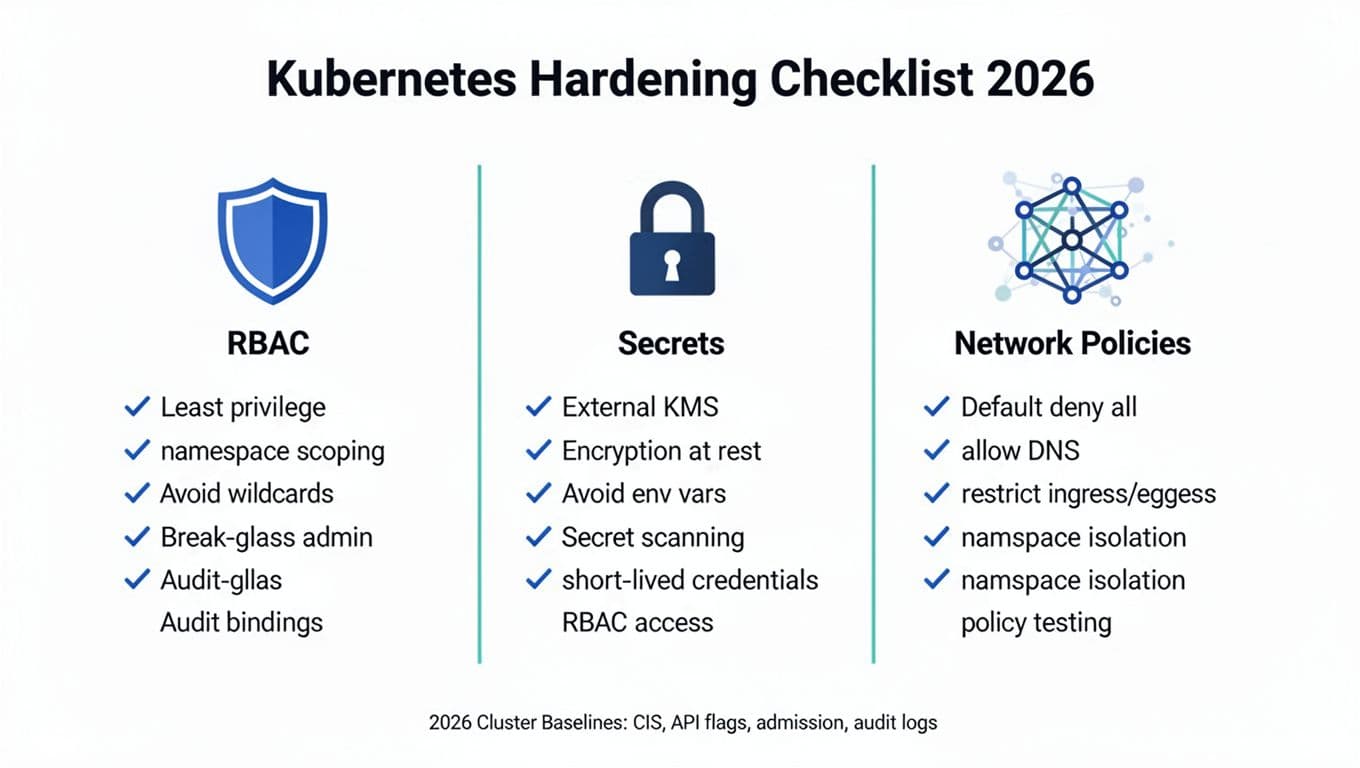

This Kubernetes hardening checklist focuses on three controls that reduce real breach impact fast: RBAC, secrets handling, and NetworkPolicies. Use it as a practical playbook for 2026 clusters, whether managed or self-hosted.

Quick triage: the 10 highest-impact fixes to do first

Use this table when you inherit a cluster and need safety improvements today.

| Fix | Why it matters (risk and impact) |
|---|---|
| Remove `cluster-admin` from humans and apps | Stops easy full-cluster takeover after a single credential leak. |
| Kill wildcard RBAC (`*` verbs or resources) | Prevents privilege escalation paths you did not intend. |
| Set `automountServiceAccountToken: false` by default | Reduces token theft from pods and SSRF-style attacks. |
| Move to short-lived SA tokens (TokenRequest) | Limits the value of a stolen token to minutes, not months. |
| Turn on Secret encryption at rest with KMS | Protects etcd backups and disk snapshots from becoming a breach. |
| Block Secrets in Git with secret scanning | Stops the most common “oops” leak from becoming permanent. |
| Rotate high-value credentials on a schedule | Shortens attacker dwell time and reduces blast radius. |
| Apply default-deny ingress and egress NetworkPolicies | Blocks lateral movement and “chatty” pods by default. |
| Allow DNS explicitly after default-deny | Avoids outages while keeping egress controlled. |
| Validate policies with real traffic tests | Prevents “it looks secure on paper” failures in production. |

If you can only do three things this week, pick: least-privilege RBAC, KMS-backed Secret encryption, and default-deny NetworkPolicies.

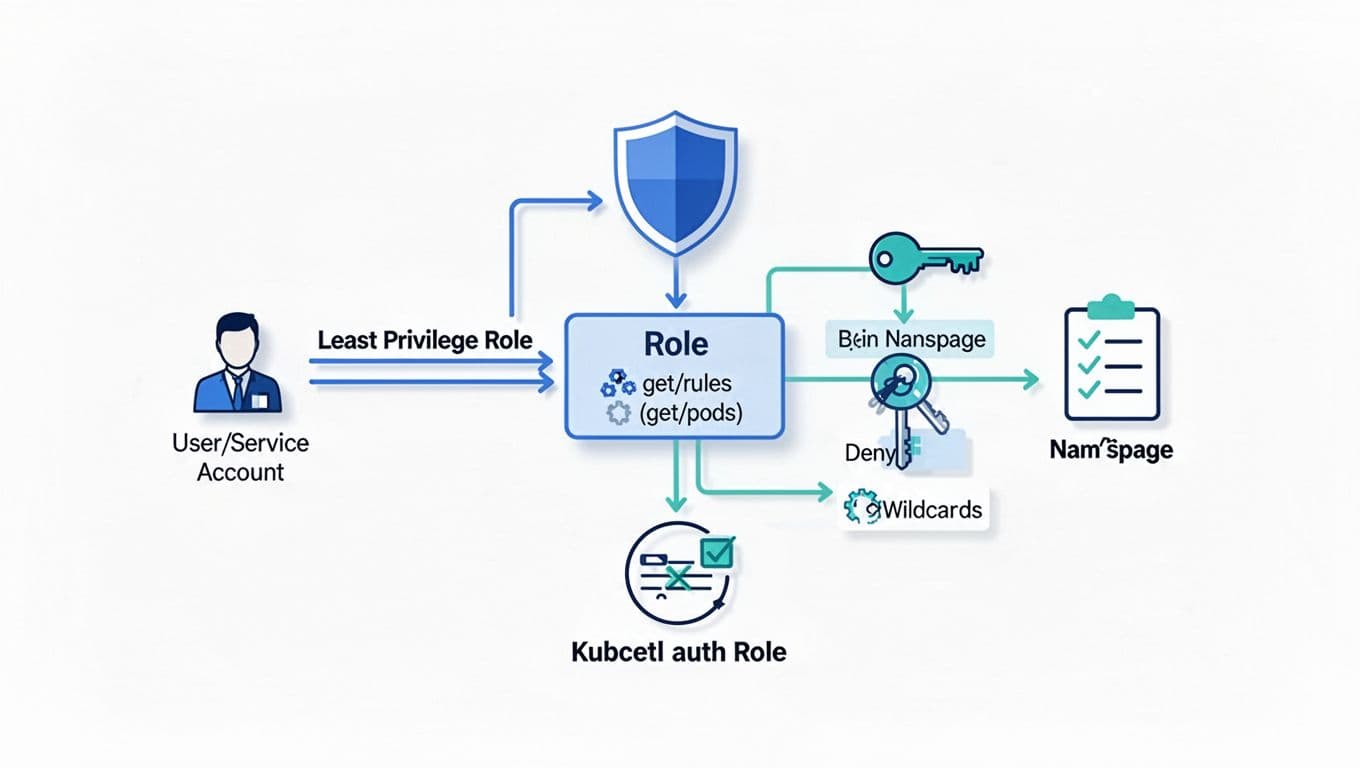

RBAC hardening that survives real incidents

Start with the official guidance on RBAC good practices and the reference for using RBAC authorization, then apply this checklist.

RBAC checklist (each item includes risk and impact):

- Prefer Roles over ClusterRoles: Namespace scope limits blast radius if a token leaks.

- Avoid `*` in `verbs` and `resources`: Wildcards make audits meaningless and often allow privilege escalation.

- Create “break-glass” admin access: Reduces day-to-day admin sprawl, while keeping incident access available.

- Lock down service accounts: Most real-world attacks abuse workload identities, not human accounts.

- Audit “who can do what” regularly: Finds drift before it becomes an incident.
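A break-glass setup can be sketched as a ClusterRoleBinding that grants `cluster-admin` to a group which stays empty outside incidents. This assumes your authenticator (OIDC, a cloud IAM mapping, or similar) passes group membership to the API server; the group name `break-glass-admins` is hypothetical.

```yaml
# Hypothetical break-glass binding: cluster-admin goes only to a group
# that has no members outside incidents.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata: { name: break-glass-cluster-admin }
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: break-glass-admins   # hypothetical group, membership managed in your identity provider
```

Adding someone to the group then happens in the identity provider, where it can be approved and logged, and, if API server audit logging is enabled, audit events record what the elevated identity actually did.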

Copy-pastable commands for quick audits:

- Check a specific action: `kubectl auth can-i create pods -n payments --as system:serviceaccount:payments:api`

- List allowed actions: `kubectl auth can-i --list -n payments --as jane@corp`

- Find bindings fast: `kubectl get rolebindings,clusterrolebindings -A -o wide`

A minimal least-privilege Role and RoleBinding (line-by-line):

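```yaml
# Role: read-only access to pods in the payments namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata: { name: pod-reader, namespace: payments }
rules:
- apiGroups: [""]                  # "" is the core API group, where pods live
  resources: ["pods"]
  verbs: ["get", "list", "watch"]  # read-only verbs; no create, update, or delete
---
# RoleBinding: grants pod-reader to the api service account in the same namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata: { name: api-reads-pods, namespace: payments }
subjects: [{ kind: ServiceAccount, name: api, namespace: payments }]
roleRef: { apiGroup: rbac.authorization.k8s.io, kind: Role, name: pod-reader }
```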

Service account token handling that reduces theft:

- Disable automount unless needed: set `automountServiceAccountToken: false` (risk: stolen tokens from any compromised pod).

- Use short-lived projected tokens via TokenRequest (risk: long-lived tokens remain valid after exfiltration).

- Scope SA permissions to one namespace (risk: cross-namespace access turns one app bug into a cluster issue).
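Both token controls can be combined in one manifest. The sketch below assumes the `api` service account from the Role example above; the image name and the `payments-backend` audience are hypothetical. The service account opts out of the default API token, and the pod requests a projected token with a 10-minute lifetime instead.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata: { name: api, namespace: payments }
automountServiceAccountToken: false   # pods using this SA get no default API token
---
apiVersion: v1
kind: Pod
metadata: { name: api, namespace: payments }
spec:
  serviceAccountName: api
  automountServiceAccountToken: false  # pod-level setting takes precedence if both are set
  containers:
  - name: api
    image: registry.example.com/payments/api:1.0   # hypothetical image
    volumeMounts:
    - name: api-token
      mountPath: /var/run/secrets/tokens
      readOnly: true
  volumes:
  - name: api-token
    projected:
      sources:
      - serviceAccountToken:
          path: api-token              # file appears at /var/run/secrets/tokens/api-token
          expirationSeconds: 600       # 10 minutes, the shortest lifetime the API accepts
          audience: payments-backend   # hypothetical audience the receiving service validates
```

The kubelet refreshes the token file before it expires, so the application should re-read it rather than cache it at startup. Because the audience is not the API server's own, a token stolen from this pod should be rejected by the Kubernetes API server.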

Secrets hardening: encryption, rotation, and runtime access

A Secret is not a vault. By default its values are stored in etcd base64-encoded, not encrypted. It’s a delivery mechanism: treat it like handing someone a spare key, not storing the master key.

Start with the upstream Kubernetes Secrets documentation, then apply these controls.

Secrets checklist (with risk and impact):

- Encrypt Secrets at rest with KMS: Protects etcd and backups from offline compromise (impact: turns a snapshot leak into a non-event). A practical walkthrough is in Encrypt Secrets at rest with KMS.

- Use an external secret store for high-value keys: Reduces how often long-lived credentials sit in the cluster (impact: lowers breach scope). For managed clusters, compare provider guidance like encryption best practices for Amazon EKS.

- Keep plaintext out of Git and CI logs: Secret leaks become permanent once mirrored and cached (impact: long-term account compromise).

- Rotate on a schedule and after incidents: Rotation turns stolen secrets into expired secrets (impact: shortens attacker access).

- Restrict runtime access to Secrets: Limit `get`/`list`/`watch` on `secrets` to only the service accounts whose pods must read them (impact: prevents “read-all-secrets” pivots).
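For self-managed control planes, KMS encryption at rest is configured with an `EncryptionConfiguration` file passed to kube-apiserver via `--encryption-provider-config`. The sketch below uses the KMS v2 provider (stable since Kubernetes 1.29); the plugin name and socket path are hypothetical and depend on the KMS plugin you deploy.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
- resources: ["secrets"]
  providers:
  # The first provider encrypts all new writes.
  - kms:
      apiVersion: v2
      name: example-kms-plugin                            # hypothetical plugin name
      endpoint: unix:///var/run/kms-plugin/socket.sock    # hypothetical plugin socket
      timeout: 3s
  # identity still lets the API server read Secrets written before encryption was enabled.
  - identity: {}
```

Existing Secrets stay unencrypted until they are rewritten; the Kubernetes docs use `kubectl get secrets --all-namespaces -o json | kubectl replace -f -` for that. On managed services such as EKS, GKE, or AKS you usually enable KMS encryption through the provider's settings instead of editing this file.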

Two practical habits that pay off fast:

First, avoid passing secrets through environment variables when you can. Env vars leak into crash dumps, debug output, and support bundles more often than teams expect.
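One way to avoid environment variables is to mount the Secret as a read-only file. In this sketch the Secret name `payments-db`, its key `password`, and the image are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata: { name: api, namespace: payments }
spec:
  containers:
  - name: api
    image: registry.example.com/payments/api:1.0   # hypothetical image
    volumeMounts:
    - name: db-credentials
      mountPath: /etc/secrets/db   # app reads /etc/secrets/db/password
      readOnly: true
  volumes:
  - name: db-credentials
    secret:
      secretName: payments-db      # hypothetical Secret
      items:
      - key: password              # hypothetical key inside the Secret
        path: password
```

A side benefit for rotation: the kubelet eventually updates Secret volumes after the Secret changes (except `subPath` mounts), while environment variables keep their old value until the container restarts.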

Second, require a “read-secret” Role that is separate from “deploy app.” If your deploy bot can also read secrets, a CI compromise becomes a data breach.
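A dedicated read-secret Role might look like the sketch below, assuming the workload needs a single Secret named `payments-db` (hypothetical). It grants only `get` on that one name and is bound to the app's service account, never to the deploy identity.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata: { name: read-payments-db-secret, namespace: payments }
rules:
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["payments-db"]   # hypothetical Secret name
  verbs: ["get"]                   # no list/watch: those return the contents of every Secret
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata: { name: api-reads-db-secret, namespace: payments }
subjects: [{ kind: ServiceAccount, name: api, namespace: payments }]
roleRef: { apiGroup: rbac.authorization.k8s.io, kind: Role, name: read-payments-db-secret }
```

One caveat from the Kubernetes RBAC good-practices guidance: permission to create Pods (or Deployments) in a namespace implicitly allows mounting any Secret in that namespace, so this separation works best alongside admission policies or keeping sensitive Secrets in namespaces the deploy bot cannot write to.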

NetworkPolicies: default-deny without breaking DNS

NetworkPolicies are your internal firewall rules. Without them, one compromised pod can often talk to everything.

Before you start, confirm your CNI enforces policies. The Kubernetes docs are explicit that you need a compatible network plugin for enforcement; see Kubernetes Network Policies. If enforcement is missing, your YAML applies cleanly and does nothing, which is a painful failure mode.

NetworkPolicy checklist (with risk and impact):

- Default-deny ingress per namespace: Stops opportunistic lateral scans (impact: reduces worm-like spread).

- Default-deny egress per namespace: Blocks data exfiltration and surprise dependencies (impact: fewer outbound paths).

- Allow DNS explicitly: Avoids outages while keeping egress tight (impact: stable rollouts).

- Isolate namespaces by label: Keeps “dev” pods from reaching “prod” services (impact: fewer cross-environment leaks).

- Validate with traffic, not hope: Prevents false confidence (impact: avoids both outages and silent exposure).
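Namespace isolation by label can be sketched as an ingress allow that only admits traffic from namespaces carrying a given label. The `env: prod` label is hypothetical; you would apply it to namespaces yourself.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: allow-from-prod-namespaces, namespace: payments }
spec:
  podSelector: {}            # applies to every pod in payments
  policyTypes: ["Ingress"]
  ingress:
  - from:
    - namespaceSelector:
        matchLabels: { env: prod }   # hypothetical label on allowed namespaces
```

Policies are additive allow-lists, so combined with default-deny this admits only pods from `env: prod` namespaces, including `payments` itself only if it carries that label. Anyone who can edit a namespace can change its labels, so restrict that permission; the automatic `kubernetes.io/metadata.name` label is maintained by the control plane and is a safer selector when you mean one specific namespace.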

A safe baseline is “deny all, then allow what’s needed.” This recipe shows the default-deny pattern: NetworkPolicy default deny all traffic.

Copy-pastable policy shapes (line-by-line):

Default deny ingress and egress for a namespace:

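```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: default-deny, namespace: payments }
# Empty podSelector selects every pod; listing both policyTypes with no rules denies all traffic.
spec: { podSelector: {}, policyTypes: ["Ingress", "Egress"] }
```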

Allow DNS egress (adjust labels for your DNS setup):

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: allow-dns, namespace: payments }
spec:
  podSelector: {}            # every pod in the namespace
  policyTypes: ["Egress"]
  egress:
  - to: [{ namespaceSelector: { matchLabels: { kubernetes.io/metadata.name: kube-system } } }]
    ports: [{ protocol: UDP, port: 53 }, { protocol: TCP, port: 53 }]   # DNS uses UDP and falls back to TCP
```
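To narrow the DNS rule above from all of `kube-system` to the DNS pods only, one option is to put a `podSelector` in the same `to` entry as the `namespaceSelector`, which makes them an AND. The `k8s-app: kube-dns` label is common for CoreDNS deployments but is an assumption here; check yours with `kubectl get pods -n kube-system --show-labels`.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: allow-dns, namespace: payments }   # replaces the broader allow-dns policy
spec:
  podSelector: {}
  policyTypes: ["Egress"]
  egress:
  - to:
    - namespaceSelector:
        matchLabels: { kubernetes.io/metadata.name: kube-system }
      podSelector:                      # same list item as namespaceSelector, so both must match
        matchLabels: { k8s-app: kube-dns }   # assumed DNS pod label
    ports: [{ protocol: UDP, port: 53 }, { protocol: TCP, port: 53 }]
```

Setups such as NodeLocal DNSCache send queries to a node-local address rather than to the DNS pods, so they need a different rule; check your CNI's documentation before tightening.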

Validation commands that catch mistakes early:

- Confirm policies select pods: `kubectl describe netpol -n payments default-deny`

- Test from a pod (the image must include `nslookup`): `kubectl exec -n payments deploy/api -- nslookup kubernetes.default.svc.cluster.local`

- Probe blocked paths (the image must include `curl`; a blocked path should fail or time out): `kubectl exec -n payments deploy/api -- curl -m 2 http://10.0.0.10:8080`

After default-deny, DNS is the first thing that breaks. Add the DNS allow rule immediately, then iterate on app flows.
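With default-deny on both ingress and egress, each app flow needs two allows: egress on the client pods and ingress on the server pods. The sketch below opens one hypothetical flow, pods labeled `app: api` connecting to pods labeled `app: postgres` on TCP 5432; the labels and port are assumptions.

```yaml
# Egress side: api pods may connect to postgres pods on 5432.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: api-to-postgres-egress, namespace: payments }
spec:
  podSelector: { matchLabels: { app: api } }
  policyTypes: ["Egress"]
  egress:
  - to: [{ podSelector: { matchLabels: { app: postgres } } }]   # no namespaceSelector = same namespace
    ports: [{ protocol: TCP, port: 5432 }]
---
# Ingress side: postgres pods accept 5432 only from api pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata: { name: postgres-from-api-ingress, namespace: payments }
spec:
  podSelector: { matchLabels: { app: postgres } }
  policyTypes: ["Ingress"]
  ingress:
  - from: [{ podSelector: { matchLabels: { app: api } } }]
    ports: [{ protocol: TCP, port: 5432 }]
```

Keeping both sides explicit means the flow keeps working regardless of which namespace-wide default you apply, and each policy documents one direction of one connection.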

Conclusion

Hardening Kubernetes in 2026 is still about the basics done well: least privilege, strong secret handling, and restricted pod-to-pod traffic. When these three controls work together, attackers lose the easy paths, and incidents stay contained.

Pick the triage items, prove them with tests, then turn your notes into a living Kubernetes hardening checklist that ships with every cluster change.